Figure 1: your computer on low memory

TL;DR: yes. You can throw more swap at most processes and it’ll eventually finish… Eventually.

Last year I sent my fully specced Dell XPS 13 with 32 GiB of RAM in for warranty repair. Side note: I wouldn’t recommend the Dell XPS 13, at least not the 4K model. The laptop gets anywhere from 1 to 3 hours of real-world battery life and runs as hot as most MacBooks. The Dell XPS 13 4K is not a viable product.

So there I was without a laptop. I pulled an item from my eBay retail inventory: meet the Lenovo IdeaPad Flex 5, a sub-$400 tablet/notebook convertible. I wasn’t sure what to expect. The laptop has an Intel Core i3 and 4 GiB of RAM. I upgraded the SSD so I could leave part of it unallocated (i.e. over-provision it), which is great for SSD longevity and performance. Not to mention that many filesystems work better with some free space.

My first thought was to migrate my XPS 13’s install to the new hardware. That wouldn’t work well: the XPS install ran on ZFS, which had the XPS’s generous RAM to lean on. ZFS needs a bit of spare RAM to operate smoothly, so I opted to set up BTRFS instead.

§Why ZFS or BTRFS?

Figure 2: zfs & btrfs

I’m human and have a knack for accidentally deleting important files. Sure, I could go back to my nightly backups, but why, when you can use a filesystem that boasts low-overhead snapshots like ZFS or BTRFS? When I delete a file, I can visit a snapshot folder on the machine, retrieve the file, and nobody will be the wiser. Every desktop should work this way; it sure beats restoring from remote backups. If you don’t have backups, please go find the nearest hammer and smash your computer. If you don’t feel bad, your data isn’t important; if you do, now you know what it feels like to go through life without backups. It hurts to lose data.
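On NixOS, one way to get that snapshot folder on BTRFS is the snapper module. The sketch below assumes /home is a BTRFS subvolume that already contains a /home/.snapshots subvolume (snapper expects one), and the user name is hypothetical. The uppercase option names follow the current services.snapper.configs schema; older releases spelled some of them in lowercase.

```nix
{
  # Periodic (hourly by default) snapshots of /home, pruned automatically.
  services.snapper.configs.home = {
    SUBVOLUME = "/home";
    ALLOW_USERS = [ "alice" ];  # hypothetical user allowed to browse/restore snapshots
    TIMELINE_CREATE = true;     # take timeline snapshots
    TIMELINE_CLEANUP = true;    # thin out old timeline snapshots
  };
}
```

A deleted file can then be copied back out of /home/.snapshots/<number>/snapshot/.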

Another reason for ZFS/BTRFS is you can replicate your filesystem efficiently.

An rsync is extremely slow compared to a zfs send or btrfs send. rsync does a lot more work (reading files, checking permissions, walking directory trees, etc.), whereas the filesystem can just stream blocks as they appear on disk down the pipe. With an incremental send, it only has to stream the blocks that changed since the previous snapshot.
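For BTRFS on NixOS, btrbk can drive those btrfs send/receive transfers on a schedule. A minimal sketch, assuming the top-level BTRFS volume is mounted at /mnt/btr_pool and a second BTRFS filesystem (e.g. a backup disk) is mounted at /mnt/btr_backup; both paths, the instance name and the retention values are hypothetical.

```nix
{
  services.btrbk.instances.home = {
    onCalendar = "hourly";                 # systemd calendar expression
    settings = {
      snapshot_preserve_min = "2d";        # keep local snapshots at least 2 days
      target_preserve_min = "no";
      target_preserve = "7d 4w";           # keep 7 daily and 4 weekly backups on the target
      volume."/mnt/btr_pool" = {
        snapshot_dir = "btrbk_snapshots";  # must already exist inside the volume
        subvolume = "home";
        target = "/mnt/btr_backup/laptop"; # receives snapshots via btrfs send/receive
      };
    };
  };
}
```

After the first full transfer, btrbk sends each new snapshot incrementally against a common parent snapshot, which is where the speedup over rsync comes from.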

Finally, you get some basic disk integrity checks. Both ZFS and BTRFS checksum every block, so you have a fairly good idea that your data is intact and not damaged (e.g. by a failing disk or bad memory flipping bits). If a file does get corrupted, the checksums tell you, so you can proactively restore it from backup or, better yet, fix your failing hardware.
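Checksums are only verified when data is read, so it helps to scrub periodically. NixOS ships scrub timers for both filesystems; a minimal sketch, with the weekly interval chosen only as an example:

```nix
{
  # Periodically read every block and verify it against its checksum.
  # Unless autoScrub.fileSystems is set, this covers the BTRFS
  # filesystems declared in fileSystems.
  services.btrfs.autoScrub = {
    enable = true;
    interval = "weekly";
  };

  # ZFS equivalent:
  # services.zfs.autoScrub.enable = true;
}
```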

§NixOS has a bad default for low memory users

If you install NixOS on a low-memory host, you’ll soon notice that NixOS does some surprising things with its /run/user/* mountpoints. Each user gets a tmpfs with a maximum capacity of 10% of your RAM. On my 4 GiB system, that caps my XDG runtime directory at ~400 MiB. The catch is that Nix will use this directory to build derivations (i.e. nix-build) when running nix-shell -p ... or similar. Quite a few derivations either require compilation from source or need to pull down a large file from the internet and then save it to the Nix store. There is a workaround: set your TMPDIR variable to /tmp. This line in your configuration.nix will do:

environment.variables.TMPDIR = "/tmp";

Setting TMPDIR to /tmp should be the default. You might think a runtime directory capped at 10% of RAM is acceptable, but consider that LibreOffice needs 6+ GiB of space to build, and other big applications need even more. Since 10% of 64 GiB is about 6.4 GiB, you’d need at least 64 GiB of RAM to use NixOS with this ill-informed default without surprises or extra configuration.
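The 10% cap itself comes from systemd-logind’s RuntimeDirectorySize= setting, which NixOS leaves at its default. If you’d rather raise it than redirect TMPDIR, here is a sketch assuming a NixOS release that still has services.logind.extraConfig (newer releases may expose this under services.logind.settings.Login instead). Keep in mind the runtime directory is still a tmpfs, so anything stored there lives in RAM or swap.

```nix
{
  # logind sizes each /run/user/<uid> tmpfs with RuntimeDirectorySize=,
  # which defaults to 10% of RAM: 10% of 4 GiB is roughly 410 MiB.
  # The 2G value is only an example; tmpfs pages still occupy RAM or swap.
  services.logind.extraConfig = ''
    RuntimeDirectorySize=2G
  '';
}
```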

§No tmpfs on /tmp for you

Just to be clear, it’s probably a bad idea to put /tmp on a tmpfs if you don’t have a couple of gigabytes to dedicate to it. In my case, I’d rather not dedicate any memory to tmpfs, so my /tmp lives on the SSD instead. Personally, I find 8-16 GiB of free space for /tmp is plenty. That should let most nix-build jobs complete, too.
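On NixOS, keeping /tmp on disk comes down to a couple of options. A sketch, assuming NixOS 23.05 or later (older releases called these boot.tmpOnTmpfs and boot.cleanTmpDir):

```nix
{
  boot.tmp.useTmpfs = false;    # keep /tmp on the SSD-backed root filesystem (the default)
  boot.tmp.cleanOnBoot = true;  # wipe leftover build trees from /tmp at every boot
}
```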

§Bloated apps kill the performance

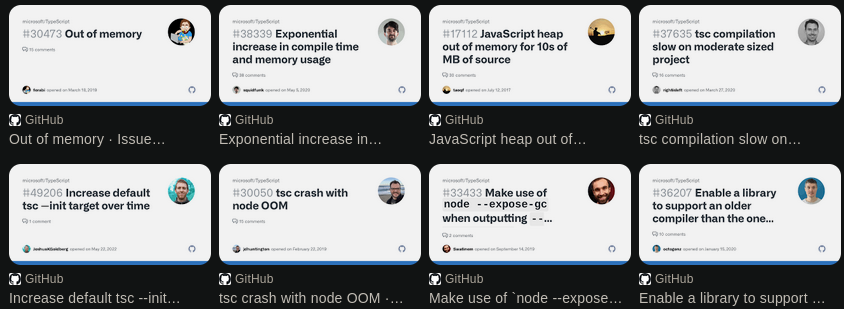

Figure 3: Folks complaining about TypeScript memory usage on GitHub

Surprisingly, the most bloated app I’ve used on this laptop is TypeScript’s compiler. tsc likes to use a couple of gigabytes of resident memory (actual RAM, not just pages swapped out to disk). It’s fatter than Firefox or Thunderbird, which are the second and third most demanding “bloat monsters”.

Another surprise was Unison, a bidirectional file synchronization tool. I use it to sync my home directories, including most of my configs. Unison will happily use at least a gigabyte of RAM. I didn’t look into it further, but I suspect its memory usage is proportional to the number of files being compared before syncing.

It goes without saying that Racket uses significant memory just to start a REPL, let alone to run the various utilities I’ve written in it.[1]

Despite all these bloated apps, the computer happily chugs along. If you’re running too many Firefox profiles or the Signal Electron app, you’ll have to wait for pages to be swapped back in, but hey, it works!
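Declaring that swap on NixOS takes one list entry. A minimal sketch, assuming a dedicated swap partition labeled swap (the label is hypothetical); a partition sidesteps the extra requirements BTRFS places on swap files, such as disabling copy-on-write and compression.

```nix
{
  swapDevices = [
    # Hypothetical label; point this at your own swap partition.
    { device = "/dev/disk/by-label/swap"; }
  ];
}
```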

§An at-a-glance metric

For a long time now, I’ve been a believer in using Linux’s loadavg to assess a system’s load at a glance. This works for memory pressure too: when you run out of physical memory, the kernel reclaims buffers and page cache for applications. That in turn slows down many operations, because cache misses push more IO back to the SSD, whose access times are orders of magnitude slower than RAM.

Additionally, the Linux loadavg counts processes in uninterruptible sleep (usually waiting on disk IO), which drives up the metric when constant SSD access is needed to make up for the lack of memory for caches and buffers.

I’m sticking with the loadavg in my status bar. It currently reads 0.03 0.21 0.41 (the 1-, 5- and 15-minute averages) on my workstation. It’s a fairly speedy box.

§Final Remarks

It’s a good idea to measure system resource usage over time. Netdata can get you started without much effort. Another personal favorite is dstat (and its successor, dool), a sar/iostat-style alternative.
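Netdata is a one-liner on NixOS. A minimal sketch; by default the dashboard is served on port 19999:

```nix
{
  services.netdata.enable = true;  # dashboard at http://localhost:19999
}
```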

8 GiB of RAM might be the sweet spot for responsive computing. Sure, more memory is always good, as long as your OS doesn’t leave it sitting “free”; in that case, it’s just unused memory. My desktop has 128 GiB of RAM, with 90 GiB of it completely free at the moment. That was a bad purchase: I could have gotten half the capacity with faster RAM. Oh well! VMs are a joy on this machine.

Dell did a superb job on the XPS 13 warranty repair, yet the machine remains in its box. I’m much happier with a potato laptop than with this fancy XPS 13 and its poor battery life and hot-running cooling solution.

-

[1] I’ve decided to quit all my endeavors with Racket. It’s not doing it for me as an engineer building production software or hobbyist tooling. Someday I might write a blog post about why this is the best choice for me. If you wish to take over any of my projects, please contact me.